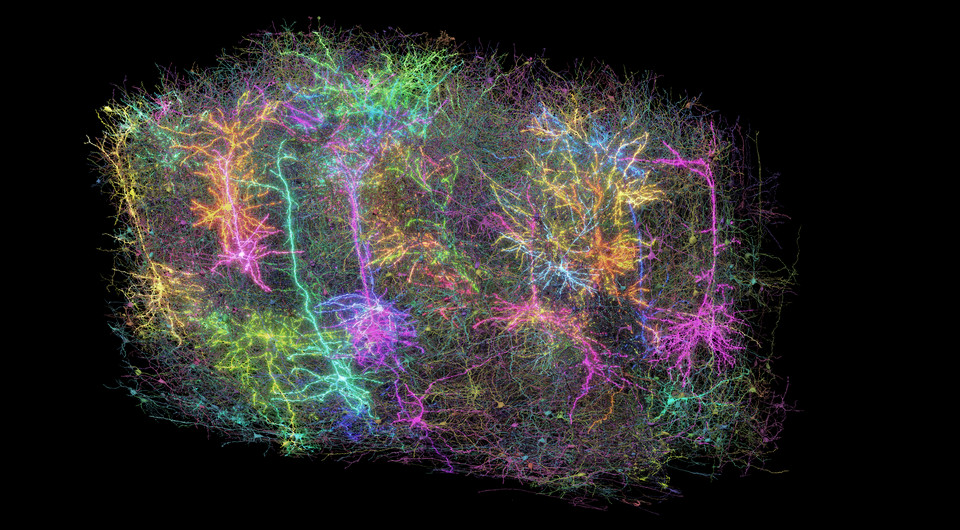

Scientists recently digitized a single cubic millimeter of a mouse’s visual cortex, a project that generated 1.6 petabytes of data to map 84,000 neurons and half a billion synapses.

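To put that into perspective: half a billion synapses is still far fewer than the parameters in today’s large language models, but that figure covers only one cubic millimeter. A whole mouse brain occupies several hundred cubic millimeters. A naive extrapolation from this sample, which assumes roughly uniform synapse density, therefore puts its synapse count at a hundred billion or more. That is comparable to the parameter counts of large-scale AI such as DeepSeek or GPT models, and well above the 29 billion parameters in a model like GigaChat. The comparison is an analogy for scale and complexity, not an equivalence: synapses shape a brain’s processing capacity much as parameters shape an AI’s “power,” but a synapse and a parameter are not interchangeable units.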

By this raw, “hardware”-level measure, an ordinary mouse brain still outstrips most of our artificial intelligence systems.

This raises an interesting question: what will happen when the computational power of AI finally catches up with biology? It’s not just about having more neurons; it’s about unlocking another dimension of thinking.

Current research is already shifting in this direction. The focus is moving from merely increasing parameter counts to truly understanding how natural intelligence works. The goal is to learn from biology in order to build artificial systems that are genuinely intelligent.

Frankly, I’m in awe. Every study like this is a concrete step toward Artificial General Intelligence (AGI): an AI that can think and learn the way a human does.

Source article on the visual cortex reconstruction (in Russian): https://nplus1.ru/news/2025/04/09/mouse-neurocube